Everyone’s building AI agents.

But very few are building reliable ones.

In demo-land, AI agents look impressive: they can plan tasks, use tools, write code, and generate answers in seconds. But in production?

Most agents fall apart somewhere between 70% and 100% completion.

And fixing them feels like navigating a maze—one layer of abstraction at a time.

In our own development work—and from learning alongside builders across the AI community—we’ve seen a clear pattern emerge:

Reliability doesn’t come from more AI. It comes from better engineering.

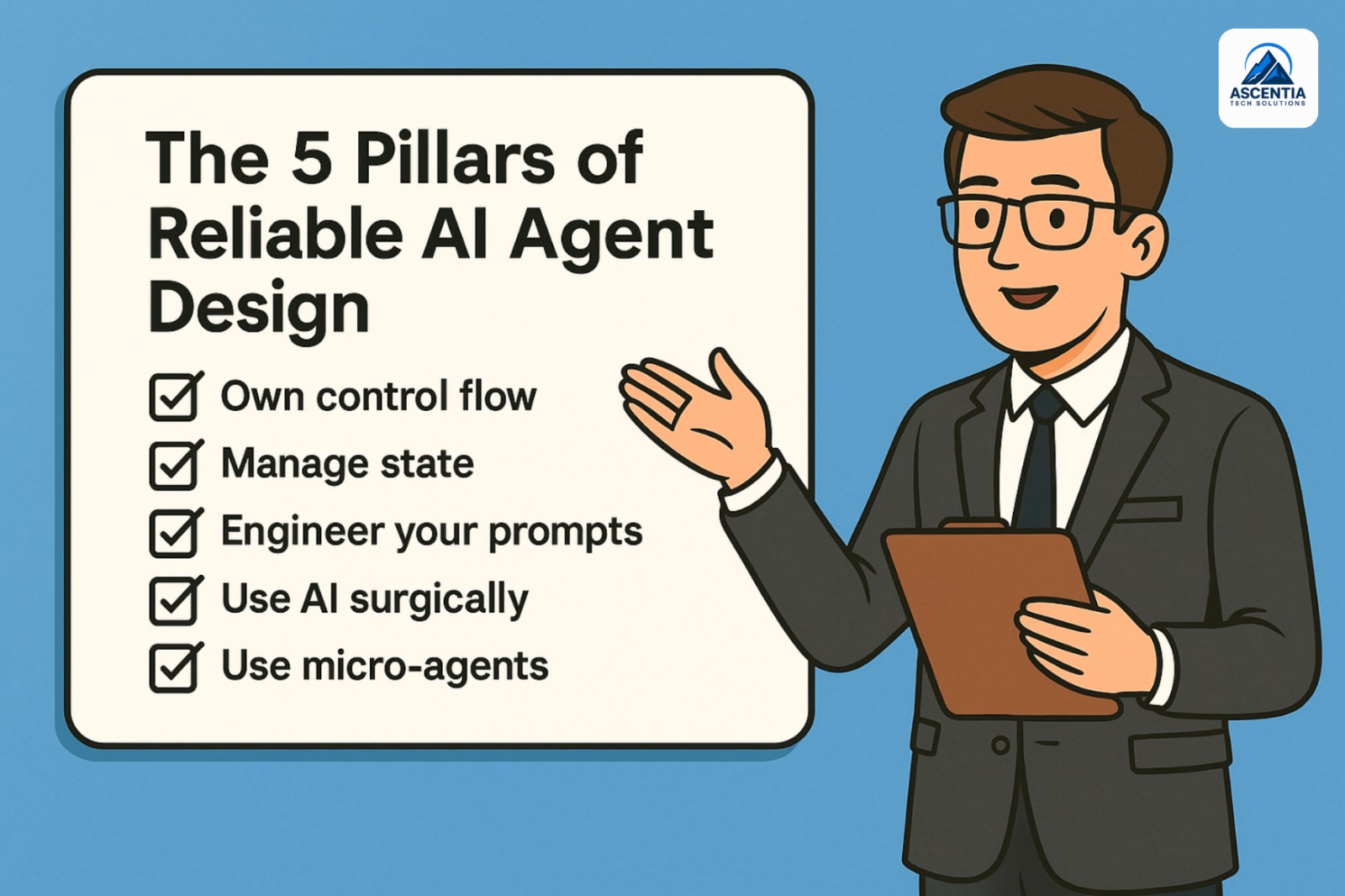

This post distills what we’ve learned into five foundational pillars. Whether you’re a startup, an enterprise team, or a solo dev, these principles will help you build AI agents that are trustworthy, testable, and production-ready.

Pillar 1: Own Your Control Flow

Let’s start here because most agent problems begin here.

The problem:

Many agent frameworks ask you to “just give the LLM a goal” and let it figure out the steps. That sounds magical—until it breaks. The agent loops endlessly, takes the wrong step, or spirals into hallucination. And you can’t trace what went wrong.

The fix:

You control the logic. The LLM just makes decisions within that logic.

Think of your system as a directed graph:

- Each node is a decision or tool invocation.

- The agent picks the next node—but only from defined options.

- You handle exit conditions, retries, and transitions explicitly.

You’ve written this before. It’s a while loop. It’s a switch statement. It’s flow control, Software 101. LLMs don’t replace your logic. They enhance it, as long as you stay in the driver’s seat.

By owning your control flow, you make your system predictable, modular, and auditable. And that means fewer fires to put out when things scale.
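Here is a minimal sketch of that idea in Python. The node names, the `GRAPH` table, and the `fake_llm` stand-in are all hypothetical; in a real system `choose_next` would wrap a model call. The point is only that the loop, the allowed transitions, and the exit condition live in your code.

```python
from typing import Callable

# Allowed transitions: each node lists the only nodes the model may pick next.
# All node names are made up for illustration.
GRAPH: dict[str, list[str]] = {
    "classify": ["lookup_order", "escalate"],
    "lookup_order": ["draft_reply", "escalate"],
    "draft_reply": ["draft_reply", "done"],  # the model may revise its draft
    "escalate": ["done"],
}

MAX_STEPS = 10  # explicit exit condition so the loop cannot run forever


def run(choose_next: Callable[[str, list[str]], str], start: str = "classify") -> list[str]:
    """Walk the graph, letting `choose_next` pick only among allowed nodes."""
    path = [start]
    node = start
    steps = 0
    while node != "done":
        if steps >= MAX_STEPS:
            raise RuntimeError(f"no exit after {MAX_STEPS} steps: {path}")
        options = GRAPH[node]
        choice = choose_next(node, options)
        if choice not in options:
            # The model proposed something off-graph: fall back deterministically.
            choice = "escalate" if "escalate" in options else options[0]
        path.append(choice)
        node = choice
        steps += 1
    return path


def fake_llm(node: str, options: list[str]) -> str:
    """Stand-in for a model call; deliberately returns an invalid choice once."""
    scripted = {"classify": "lookup_order", "lookup_order": "delete_database"}
    return scripted.get(node, options[0])


path = run(fake_llm)
```

In this run the off-graph choice at `lookup_order` is replaced by `escalate`, and the returned `path` is a plain list you can log and audit.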

Pillar 2: Manage State Outside the Agent

Agents are not magical, stateful beings.

They are stateless functions: input → output.

❌ What goes wrong:

When frameworks try to manage state inside the agent, things get messy:

- Errors accumulate and confuse future steps.

- Tool calls get lost between retries.

- Context gets bloated with irrelevant history.

✅ What works:

Move state out of the agent loop and into your system.

Track two kinds of state:

- Execution state — current step, retries, pending actions

- Business state — user inputs, messages, approvals, data payloads

Store these in a database, Redis, or your existing backend logic.

This lets you:

- Pause and resume workflows

- Handle human-in-the-loop steps

- Serialize and audit every decision

- Retry failed steps intelligently

When you own the state, agents become modular helpers, not mysterious operators.
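As a rough sketch, assuming a plain dict stands in for Redis or a database table, the pattern can look like this. `WorkflowState`, `agent_step`, and the refund scenario are hypothetical names chosen for illustration; the agent step is a pure function that takes a state and returns a new one.

```python
import json
from dataclasses import asdict, dataclass, field, replace
from typing import Optional


@dataclass
class WorkflowState:
    # Execution state
    step: str = "start"
    retries: int = 0
    pending_action: Optional[str] = None
    # Business state
    messages: list[str] = field(default_factory=list)
    approved: bool = False


def save(store: dict[str, str], workflow_id: str, state: WorkflowState) -> None:
    """Serialize the whole state so every decision can be audited later."""
    store[workflow_id] = json.dumps(asdict(state))


def load(store: dict[str, str], workflow_id: str) -> WorkflowState:
    return WorkflowState(**json.loads(store[workflow_id]))


def agent_step(state: WorkflowState) -> WorkflowState:
    """Stateless step: reads a state, returns a new one, keeps nothing itself."""
    if state.step == "start":
        return replace(state, step="await_approval", pending_action="send_refund")
    if state.step == "await_approval" and state.approved:
        return replace(state, step="done", pending_action=None)
    return state  # nothing to do until a human approves


store: dict[str, str] = {}  # stand-in for Redis or a database table

# First run: the agent proposes an action, then the workflow pauses.
save(store, "wf-1", agent_step(WorkflowState(messages=["refund order 42"])))
paused = load(store, "wf-1")

# Later, possibly in another process: a human approves and the workflow resumes.
save(store, "wf-1", agent_step(replace(paused, approved=True)))
final = load(store, "wf-1")
```

Because the state is just serialized data, pausing for a human is nothing more than not calling `agent_step` until the approval arrives.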

Pillar 3: Engineer Your Prompts with Precision

This is where most people underestimate the craft.

❌ The mistake:

Copy-pasting prompts from open-source tools or ChatGPT playgrounds, and hoping that “just describing what you want” is enough.

But LLMs don’t read your intent. They respond to the exact tokens you give them.

✅ The truth:

Prompts are code. Every token matters.

If you want consistent, accurate, and reliable behavior from an agent, you must:

- Write and test prompts deliberately

- Strip unnecessary fluff

- Summarize past context clearly

- Handle errors gracefully and compactly

- Control token usage for better performance

One well-crafted prompt can outperform an entire framework full of boilerplate.

And when prompts are versioned, tested, and owned by you, debugging gets far easier: you can trace a change in behavior back to a specific change in the prompt.

This is context engineering—the most underrated skill in building agents that actually work.
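One way to treat a prompt as code, sketched in Python: keep it as a named, versioned template and build it through a function you can unit test. The prompt text, the `PROMPT_VERSION` tag, and the 200-character summary cap are assumptions made for this example, and the character cap is only a crude proxy for a token budget.

```python
from string import Template

PROMPT_VERSION = "extract_order_v2"  # hypothetical tag, bumped on every prompt change

EXTRACT_ORDER = Template(
    "Extract the order ID and the customer's request from the message.\n"
    'Reply with JSON only: {"order_id": string, "request": string}.\n'
    "Summary of earlier turns: $summary\n"
    "Message: $message"
)

MAX_SUMMARY_CHARS = 200  # crude stand-in for a token budget


def build_prompt(message: str, summary: str = "none") -> str:
    """Build the prompt with whitespace collapsed and the summary capped."""
    compact = " ".join(summary.split())[:MAX_SUMMARY_CHARS]
    return EXTRACT_ORDER.substitute(summary=compact, message=message.strip())


prompt = build_prompt(
    "  Where is order A17?  ",
    summary="user   asked\n\nabout shipping " * 50,
)
```

With the prompt behind a function, a regression test can assert on its shape (length, required instructions, no stray whitespace) before any model is called.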

Pillar 4: Use AI Only Where It Adds Value

AI is powerful. But too much AI is like too much seasoning—it overwhelms the dish.

❌ The trap:

Trying to replace entire workflows with LLMs. Letting them make every decision, call every tool, and even manage retries.

That’s not smart automation. That’s a mess waiting to happen.

✅ The better way:

Start with deterministic logic. Sprinkle AI only where it helps.

Here’s where LLMs shine:

- Turning unstructured text into structured data (e.g., JSON)

- Interpreting ambiguous instructions

- Generating flexible content (e.g., emails, summaries, names)

- Making suggestions where hard-coded logic falls short

Everything else—loops, error handling, retries, approvals—belongs in your code. Think of the LLM as a skilled assistant, not a replacement for your backend.

This hybrid approach keeps the fast, predictable parts in code and confines the LLM to steps where its output can be checked.
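To make the split concrete, here is a small sketch: the model only turns free text into JSON, while validation and retries stay in ordinary code. The field names, the `llm` callable, and the scripted replies are hypothetical; swap in your own model client.

```python
import json
from typing import Callable, Optional

REQUIRED = {"order_id": str, "quantity": int}  # hypothetical schema


def parse_order(raw: str) -> Optional[dict]:
    """Deterministic check of model output; returns None if it is unusable."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    for key, expected in REQUIRED.items():
        if type(data.get(key)) is not expected:  # strict: rejects bools as ints
            return None
    if data["quantity"] <= 0:
        return None
    return {key: data[key] for key in REQUIRED}  # drop any extra fields


def extract_order(text: str, llm: Callable[[str], str], max_attempts: int = 3) -> dict:
    """The retry loop lives in code; the model is only asked to extract."""
    for _ in range(max_attempts):
        order = parse_order(llm(text))
        if order is not None:
            return order
    raise ValueError(f"no valid order after {max_attempts} attempts")


# Stand-in for a model: the first reply is chatty, the second is valid JSON.
replies = iter([
    "Sure! Here is the order you asked for.",
    '{"order_id": "A17", "quantity": 2, "note": "gift wrap"}',
])
order = extract_order("two of A17 please", lambda _text: next(replies))
```

The first reply fails validation and is retried; the second passes, and the unexpected `note` field is dropped before anything downstream sees it.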

Pillar 5: Use Micro-Agents Instead of Mega-Agents

If your agent has 20+ steps, handles 10 tools, and tries to solve world peace—it’s not an agent. It’s an architectural hazard.

❌ What breaks:

- The agent loses context.

- The prompt becomes too long or ambiguous.

- Debugging becomes impossible.

- One failure brings the whole thing down.

✅ What works:

Break large workflows into small, focused micro-agents.

Each micro-agent should:

- Have a clear job (e.g., “decide deployment order”)

- Require minimal context

- Return results to your orchestration logic

This keeps each piece testable, composable, and manageable.

💡 Real example:

HumanLayer’s CI/CD bot handles deployments like this:

- The pipeline runs deterministically until it needs input

- A micro-agent proposes the next deployment step (e.g., “deploy frontend”)

- A human approves or adjusts

- The system continues

Small, specialized agents play nicely with humans and software. That’s where reliability lives.
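The flow above can be sketched roughly as follows. This is our own illustration, not HumanLayer’s implementation: `propose_next` stands in for a single small model call, `human` stands in for an approval step, and the service names are made up.

```python
from typing import Callable

Proposer = Callable[[list[str], list[str]], str]
Approver = Callable[[str, list[str]], str]


def propose_next(remaining: list[str], deployed: list[str]) -> str:
    """Hypothetical micro-agent: one narrow job, minimal context. Stubbed here."""
    return remaining[0]


def deploy_all(services: list[str], propose: Proposer, approve: Approver) -> list[str]:
    """Deterministic orchestration; the agent and the human only pick the next step."""
    remaining = list(services)
    deployed: list[str] = []
    while remaining:
        proposal = propose(remaining, deployed)
        if proposal not in remaining:
            raise ValueError(f"agent proposed unknown service: {proposal}")
        choice = approve(proposal, remaining)  # the human accepts or adjusts
        if choice not in remaining:
            raise ValueError(f"approver chose unknown service: {choice}")
        remaining.remove(choice)
        deployed.append(choice)  # stand-in for the real deployment call
    return deployed


def human(proposal: str, remaining: list[str]) -> str:
    """Stand-in approver who insists the backend goes out first."""
    return "backend" if "backend" in remaining else proposal


order = deploy_all(["frontend", "backend", "worker"], propose_next, human)
```

Here the human overrides the first proposal, so `order` ends up as backend, frontend, worker, and the micro-agent never sees more than the list of remaining services.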

Build Agents Like Software, Not Sci-Fi

Here’s the bottom line:

- AI agents aren’t magic

- LLMs don’t understand workflows—they generate tokens

- Frameworks can help, but they can also hide important decisions

What makes your AI reliable is not the model—it’s the engineering around it.

When you:

- Own the control flow

- Own the state

- Own the prompts

- Use AI surgically

- Break things into micro-agents

…you get a system that scales with confidence.

Let’s Talk AI Agents That Actually Work

Whether you’re building internal tools, customer assistants, or automation agents, we can help you:

- Audit your current agent setup

- Design smarter prompts and flows

- Migrate from fragile frameworks to sustainable systems

Subscribe to our newsletter and stay up to date with what we’re learning and building at Ascentia.